Shaping the Future of Technology

Electrical and computer engineering extends from the nanoscale level of integrated electronics to gigantic power grids; from single-transistor devices to networks comprising a billion nodes. Our students discover and apply new technologies and innovations in our three nationally ranked academic degree programs.

Our Programs

- Minor

- B.S.

- M.Eng. On Campus

- M.Eng. Distance Learning

- M.S.

- Ph.D.

Electrical and Computer Engineering

Focuses on developing electrical systems, from circuits to computers. Great for those interested in hardware, software, and advancing technology.

Strategic Areas of Research

-

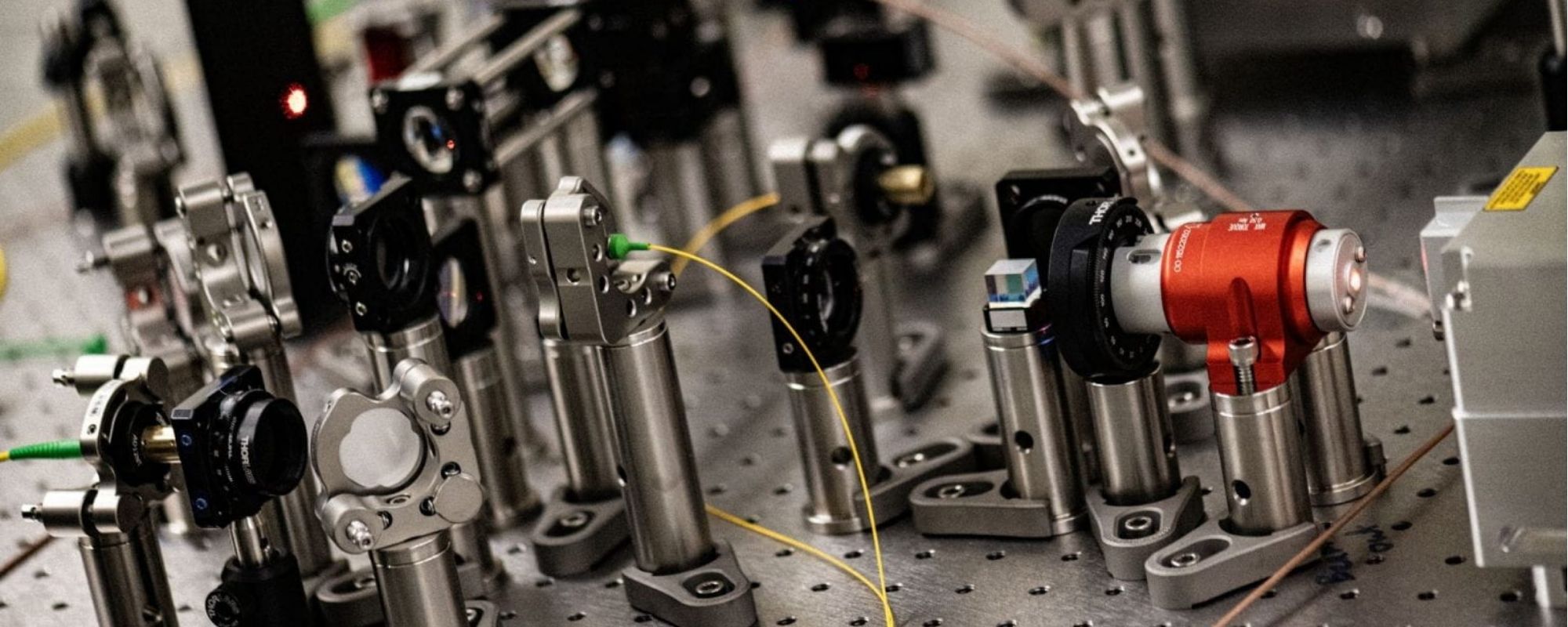

![Karan Mehta's Photonics and Quantum Electronics Group lab equipment]()

Bio-Electrical Engineering

Interfaces for sensing and actuation that help researchers understand the physiological and pathological mechanisms of disease and enable advanced robotic interfaces in medicine.

-

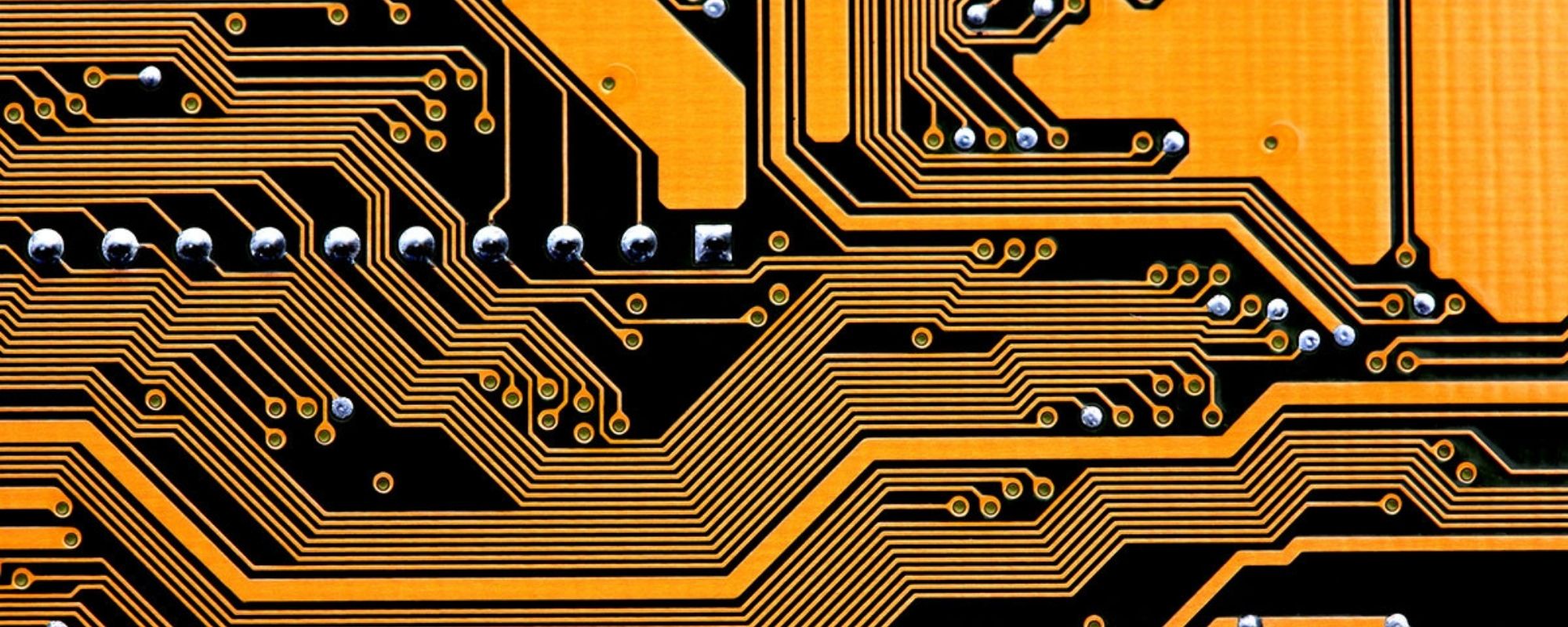

![Circuit board]()

Circuits and Electronic Systems

Analog and mixed-signal circuits, RF transceivers, low-power interfaces, power electronics and wireless power transfer, and many other areas.

![Blockchain graphic from the Statistical Signal Processing Lab of Vikram Krishnamurthy]()

Computer Engineering

Digital logic and VLSI design, computer architecture and organization, embedded systems and the Internet of Things, virtualization and operating systems, code generation and optimization, computer networks and data centers, electronic design automation, and robotics.

![A drone working on pollination in a strawberry field in Kirsten Petersen's lab]()

Information, Networks, and Decision Systems

The advancement of research and education in the information, learning, network and decision sciences.

-

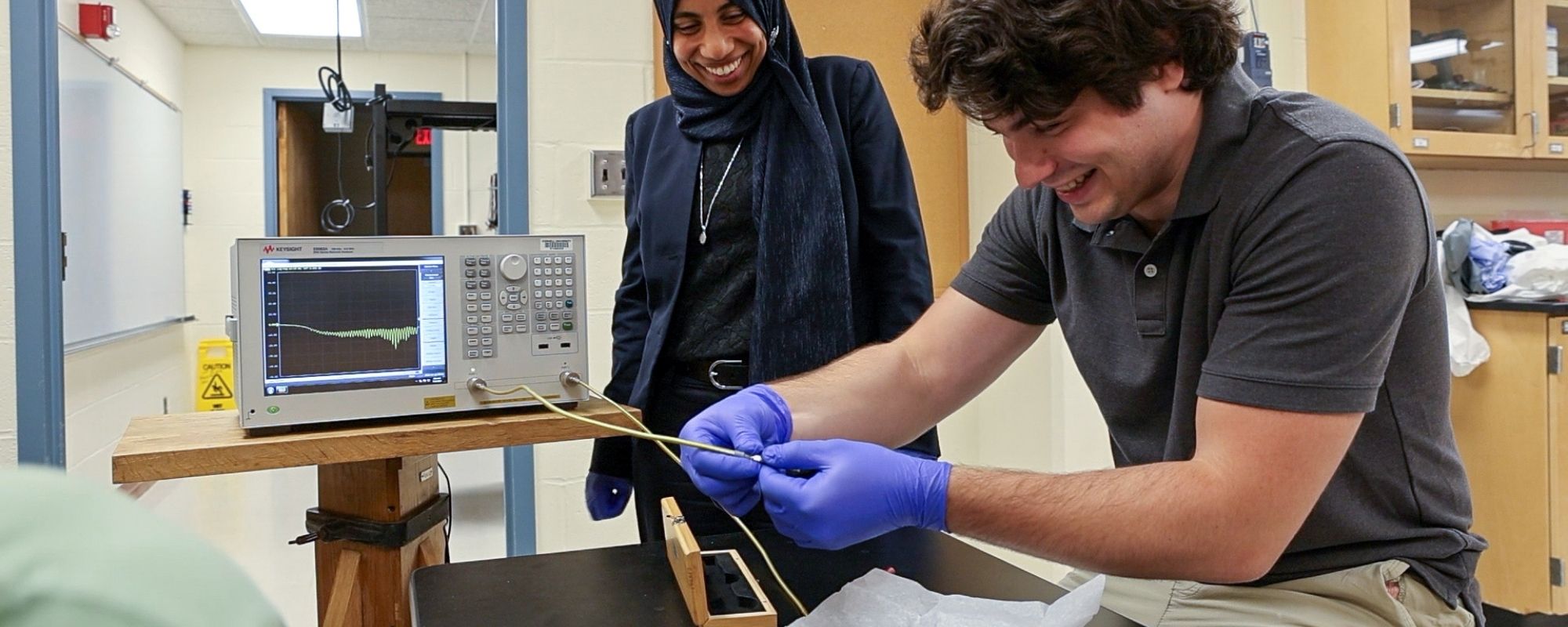

![Amal El-Ghazaly working with a student in her lab on magnetic sensors]()

Physical Electronics, Devices, and Plasma Science

Electronic and optical devices and materials, micro-electromechanical systems, acoustic and optical sensing and imaging, quantum control of individual atoms near absolute zero, and experiments on high-energy plasmas at temperatures close to those at the center of the sun.

![Student showcasing their robot during the annual Electrical and Computer Engineering Robotics Showcase event]()

Robotics and Autonomy

Topics include swarm intelligence, embodied intelligence, autonomous construction, bio-cyber physical systems, human-swarm interaction, and soft robots.

News Highlights

Historic $100 million investment to expand Engineering’s Duffield Hall

The commitment, the largest in Cornell Engineering’s history, from David A. Duffield ’62, MBA ’64, will significantly expand the college’s existing Duffield Hall, creating a new state-of-the-art home for the School of Electrical and Computer Engineering.

Ignite alum Gallox Semiconductors joins Breakthrough Energy Fellows

Gallox Semiconductors, led by Jonathan McCandless, Ph.D. ’23, has been selected for the fourth cohort of the Breakthrough Energy Fellows, a group of entrepreneurs focusing on technologies that reduce greenhouse gases.

University celebrates top faculty for outstanding teaching, mentoring

Eleven teaching faculty from across the university have been awarded Cornell’s highest honors for graduate and undergraduate teaching, Interim President Michael I. Kotlikoff announced Oct. 22.